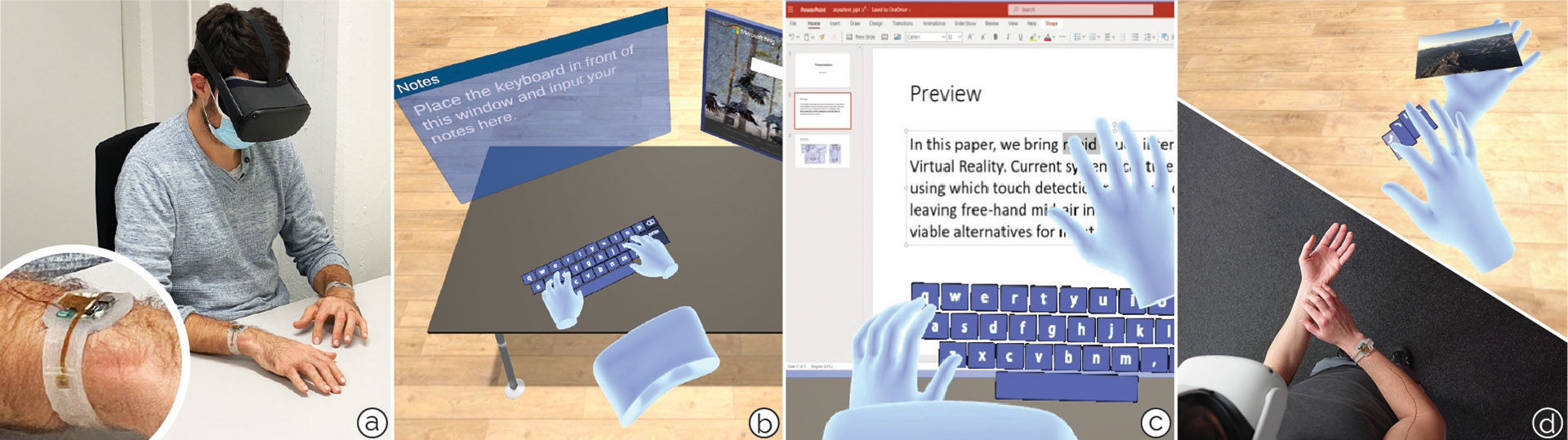

TapID

Rapid Touch Interaction in Virtual Reality using Wearable Sensing

IEEE VR 2021 (Best demonstration award)

Abstract

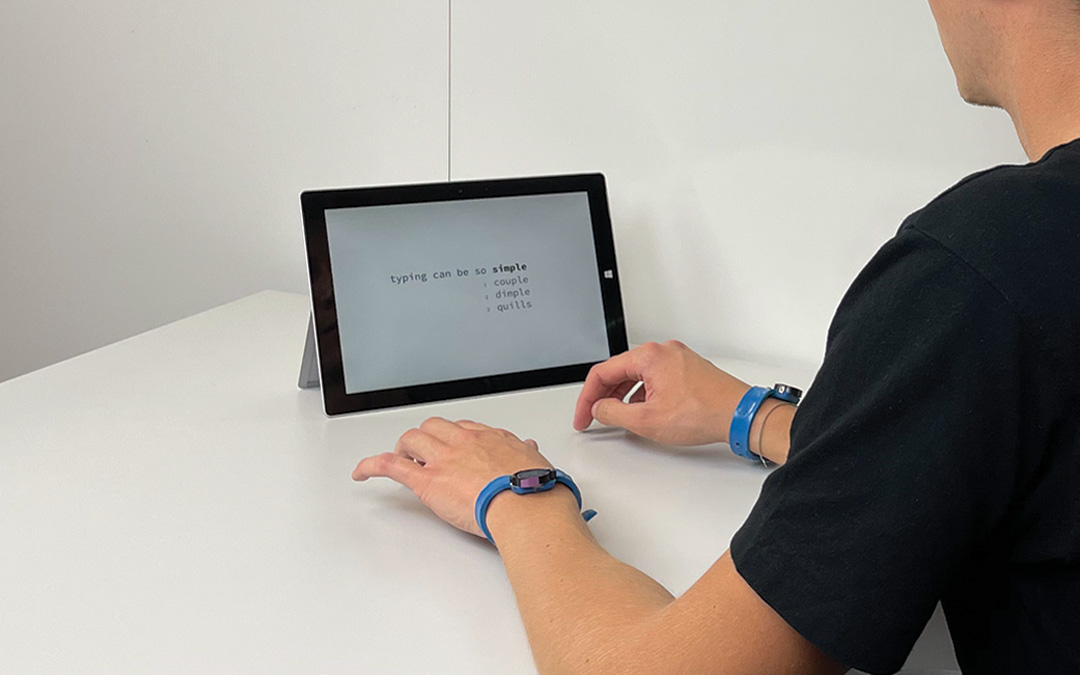

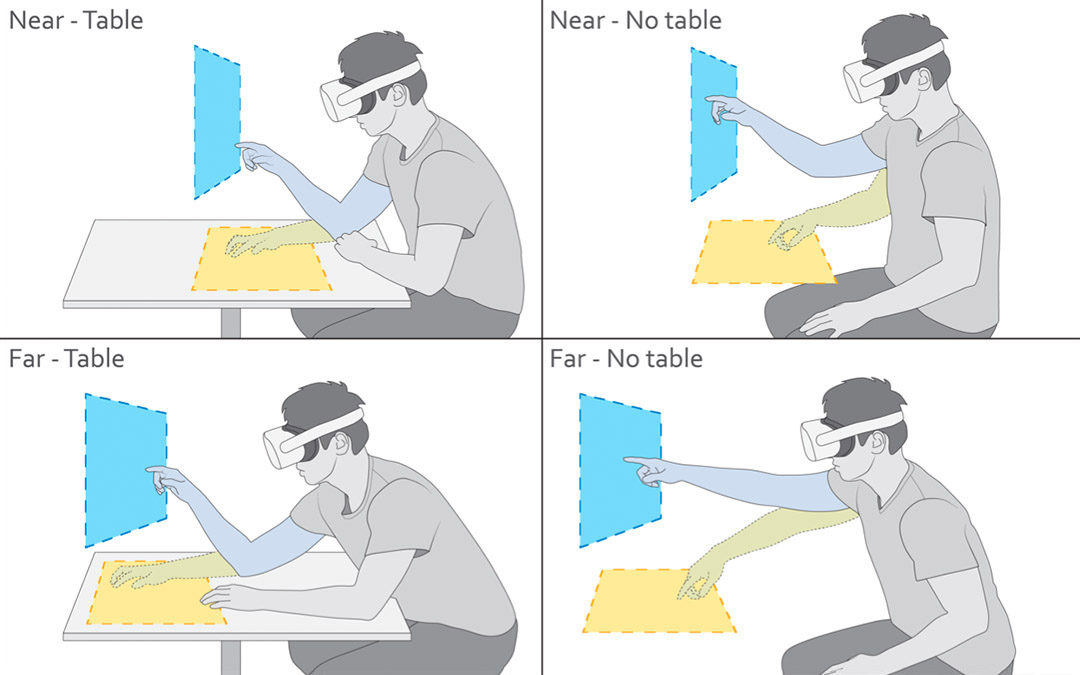

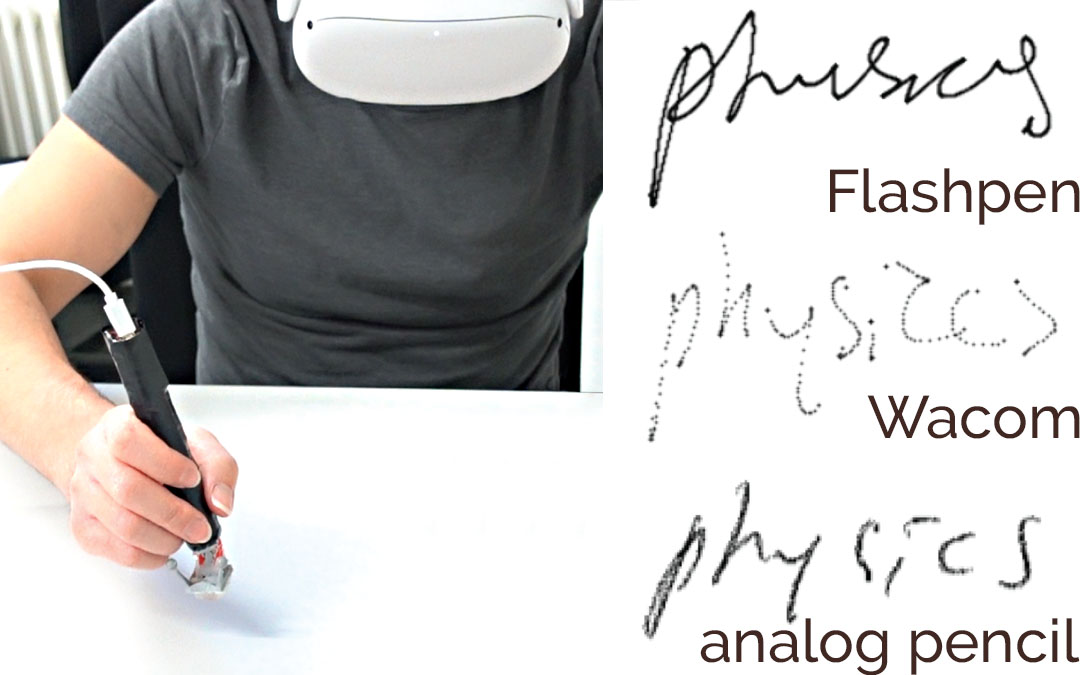

Current Virtual Reality systems typically use cameras to capture user input from controllers or free-hand mid-air interaction. In this paper, we argue that this is a key impediment to productivity scenarios in VR, which require continued interaction over prolonged periods of time—a requirement that controller or free-hand input in mid-air does not satisfy. To address this challenge, we bring rapid touch interaction on surfaces to Virtual Reality—the input modality that users have grown used to on phones and tablets for continued use. We present TapID, a wrist-based inertial sensing system that complements headset-tracked hand poses to trigger input in VR. TapID embeds a pair of inertial sensors in a flexible strap, one at either side of the wrist; from the combination of registered signals, TapID reliably detects surface touch events and, more importantly, identifies the finger used for touch. We evaluated TapID in a series of user studies on event-detection accuracy (F1 = 0.997) and hand-agnostic finger-identification accuracy (within-user: F1 = 0.93; across users: F1 = 0.91 after 10 refinement taps and F1 = 0.87 without refinement) in a seated table scenario. We conclude with a series of applications that complement hand tracking with touch input and that are uniquely enabled by TapID, including UI control, rapid keyboard typing and piano playing, as well as surface gestures.
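For readers unfamiliar with the metric, the F1 scores quoted in the abstract are the harmonic mean of precision and recall. The snippet below is a minimal sketch of that standard definition only; the function name `f1_score` and the example counts are illustrative assumptions and are not taken from the TapID evaluation.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Return the F1 score, the harmonic mean of precision and recall."""
    detected = true_positives + false_positives   # events the system reported
    actual = true_positives + false_negatives     # events that really occurred
    if detected == 0 or actual == 0:
        return 0.0  # precision or recall is undefined; report 0 by convention
    precision = true_positives / detected
    recall = true_positives / actual
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for illustration only (not data from the paper):
# 90 taps detected correctly, 5 spurious detections, 10 missed taps.
example = f1_score(true_positives=90, false_positives=5, false_negatives=10)
print(f"F1 = {example:.3f}")
```

Because F1 penalizes both spurious and missed detections, a value near 1 indicates that the system rarely does either.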

Reference

Manuel Meier, Paul Streli, Andreas Fender, and Christian Holz. TapID: Rapid Touch Interaction in Virtual Reality using Wearable Sensing. In Conference on Virtual Reality and 3D User Interfaces 2021 (IEEE VR, Best demonstration award).